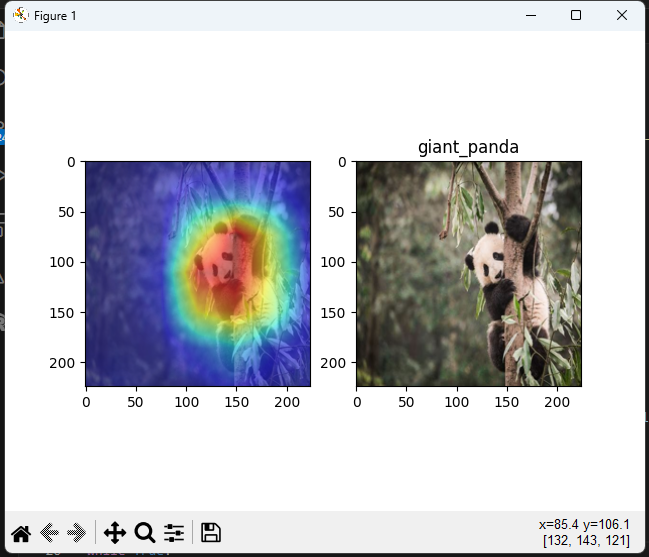

This weekend I set out to make what I call AI heatmaps: when an image classification model predicts a class, we also track which pixels in the image influenced its prediction. I first tried PyTorch, but after a lot of code and a lot of errors I put that on pause and explored other libraries. I ended up using Keras and ResNet for my class activation mapping Python script, following along with a video.

Although this looks like a heatmap, I think the proper name is a class activation map, or CAM. I may keep calling them AI heatmaps anyway.
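To make the idea a bit more concrete, here is a minimal NumPy sketch of how a CAM can be computed. It is not the code from my script. It assumes the model ends in global average pooling followed by a single dense layer (as ResNet does), and the names `feature_maps`, `class_weights`, and `class_activation_map` are made up for illustration.

```python
import numpy as np


def class_activation_map(feature_maps, class_weights, class_index):
    """Weighted sum of the last conv feature maps for one class.

    feature_maps:  array of shape (H, W, C), output of the last conv block
    class_weights: array of shape (C, num_classes), weights of the final dense layer
    class_index:   index of the class to explain
    """
    # Each channel is scaled by how much it contributes to the chosen class,
    # then the channels are summed into a single (H, W) map.
    cam = feature_maps @ class_weights[:, class_index]

    # Rescale to [0, 1] so the map can be drawn as a heatmap.
    # This rescaling is only for display.
    cam = cam - cam.min()
    peak = cam.max()
    if peak > 0:
        cam = cam / peak
    return cam
```

The resulting map is small (7x7 for a 224x224 input to ResNet50), so it still has to be resized to the image size before it can be overlaid on the original picture.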

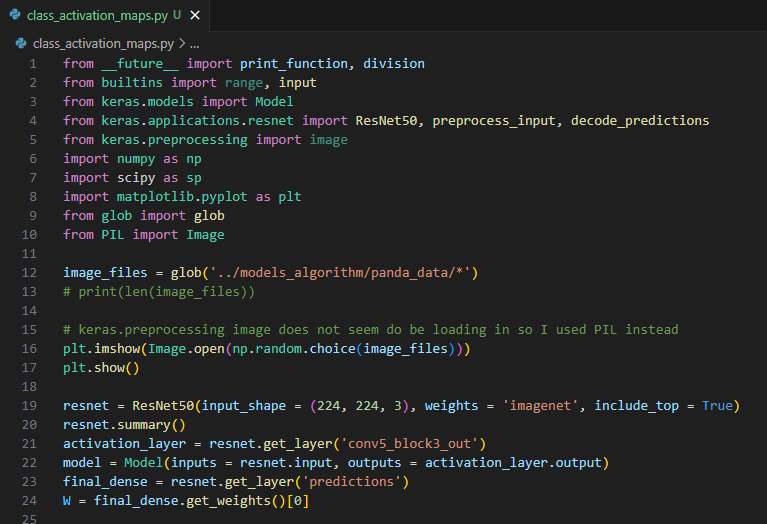

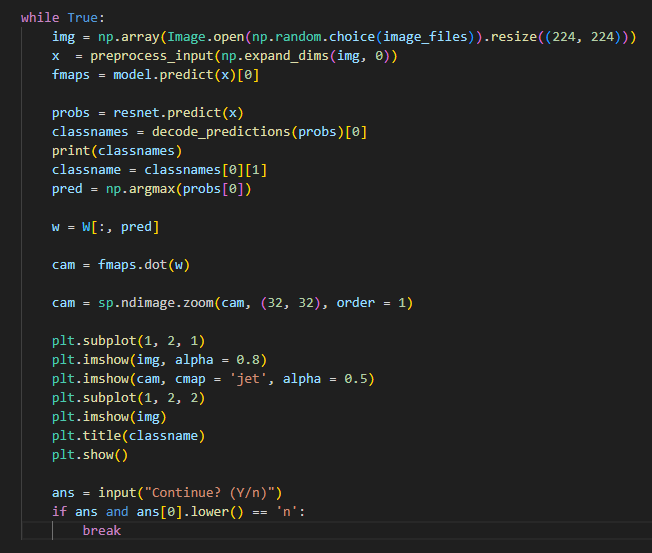

I got most of the code from this video, but some of the libraries were outdated, so I had to use the Pillow library to load images rather than Keras's image utilities. ResNet also seems to have changed how you access layers, so I had to change the call to use the exact name of the layer, which could be brittle if the number of layers changes. Otherwise, the code is pretty much the same for now.
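One way to avoid hard-coding the layer name might be to walk the layers from the end and take the last one whose output is still a 4D feature map (batch, height, width, channels). This is only a sketch and not what my script currently does. The helper name `find_last_spatial_layer_name` is made up, and it assumes each layer exposes `name` and `output.shape` the way Keras layers in a built model do.

```python
def find_last_spatial_layer_name(model):
    """Return the name of the last layer whose output is a 4D feature map.

    Assumes `model.layers` is an ordered list and that each layer has
    `name` and `output.shape` attributes (true for built Keras models).
    """
    for layer in reversed(model.layers):
        try:
            shape = layer.output.shape
        except (AttributeError, ValueError):
            # Skip layers with no single output tensor to inspect.
            continue
        # (batch, height, width, channels) means the spatial layout is intact.
        if len(shape) == 4:
            return layer.name
    raise ValueError("No layer with a 4D output was found in this model.")
```

The returned name can then be passed to `model.get_layer(...)` in place of a hand-typed string, so the script does not break if the layer names or the number of layers change.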

I want to alter this code a little, however. The classifiers and models are not the ones we built, so I think it would be pretty cool to plug our housing models into this method and see, with visual examples, how well they are operating. Since we know our models are not very accurate, this could help us figure out which specific features we need more images of, or which features lead the model to false guesses.